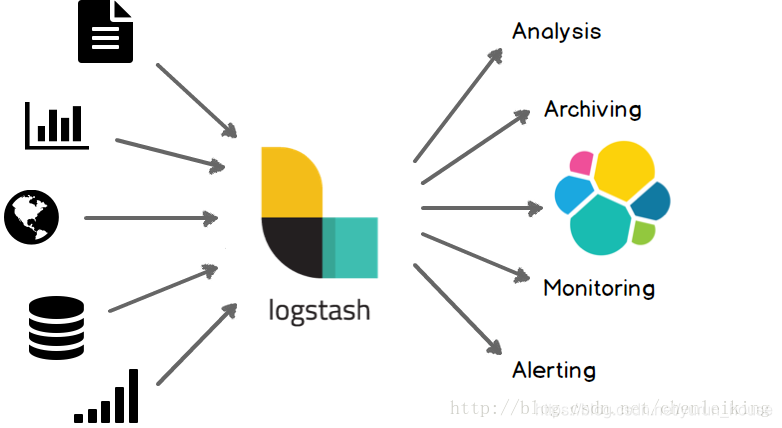

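1: Logstash overview

Put simply, Logstash is a pipeline that moves data in real time from its input end to its output end. You can also place filters in the middle of the pipeline to suit your needs; Logstash ships with many powerful filters that cover a wide range of use cases.

Logstash is commonly used as the log collector in logging systems, most often as the collection component of the ELK stack.

2: What Logstash does

Logstash centralizes, transforms, and stores your data. It is an open-source, server-side data processing pipeline that can ingest data from multiple sources at the same time, transform it, and then send it to your favorite "stash".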

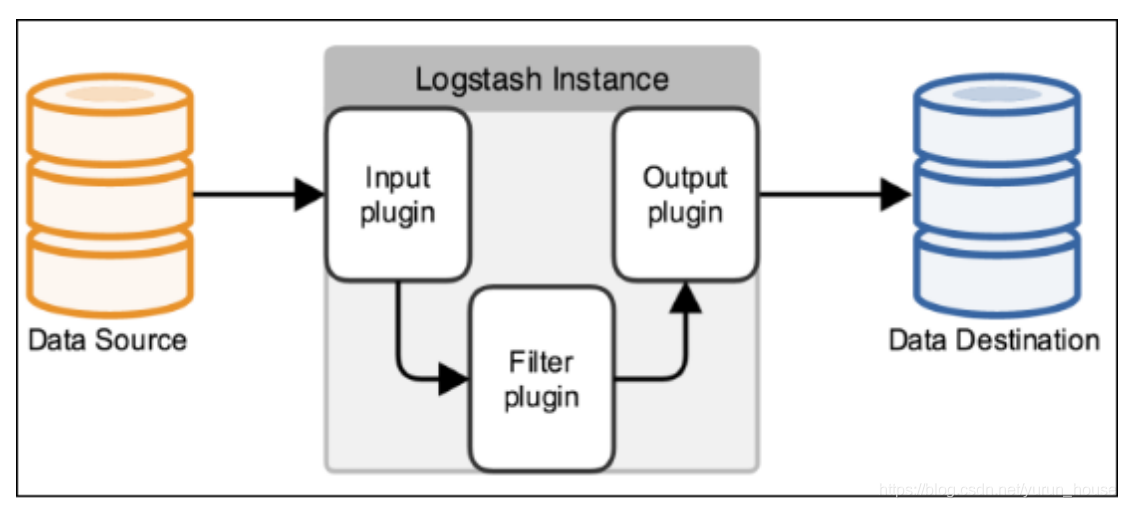

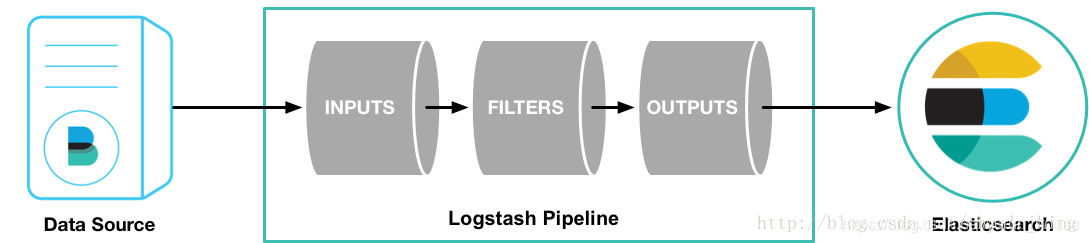

3: Logstash architecture

The basic Logstash pipeline is input | filter | output. The filter stage can be omitted when the data needs no additional processing.

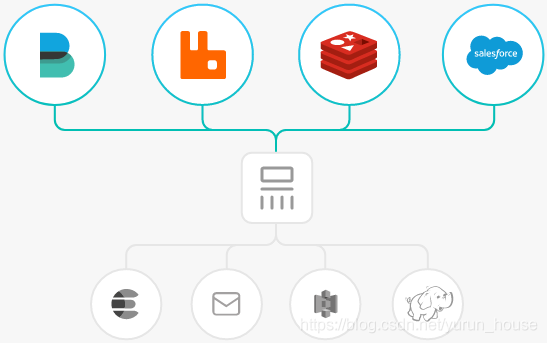

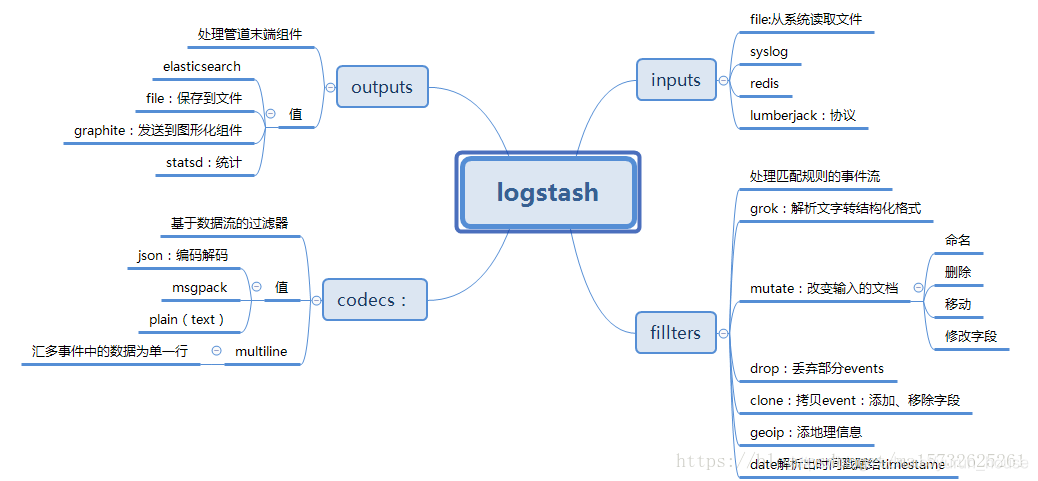

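3.1 Input: collects data of all shapes, sizes, and sources from your servers.

Data is often scattered or siloed across many systems in many formats. Logstash supports a variety of inputs that capture events from many common sources at the same time, so you can ingest data from your logs, metrics, web applications, data stores, and various AWS services as a continuous stream.

3.2 Filter

Filters process an event before it is sent on through an output. The filter used throughout these notes is grok.

grok: parses text and gives it structure. It is currently the best way in Logstash to turn unstructured log data into structured, queryable data.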
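As a rough illustration of capturing several sources at once, a single input block can declare more than one plugin. The sketch below is an assumption for illustration only: the path /var/log/messages, the port 5514, and the type values are hypothetical and do not come from the setup in these notes.

```conf
input {
  # Tail a local log file (hypothetical path).
  file {
    path => ["/var/log/messages"]
    type => "system"
  }

  # Listen for syslog messages over the network (hypothetical port).
  syslog {
    port => 5514
    type => "syslog"
  }
}
```

Events from both plugins flow into the same filter and output stages; the type field can be used to tell them apart.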

[root@node1 ~]# rpm -ql logstash | grep "patterns$"    # where grok's predefined patterns are stored

/usr/share/logstash/vendor/bundle/jruby/2.5.0/gems/logstash-patterns-core-4.1.2/patterns/grok-patterns

/usr/share/logstash/vendor/bundle/jruby/2.5.0/gems/logstash-patterns-core-4.1.2/patterns/mcollective-patterns

[root@node1 ~]#
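Each line in these pattern files is a NAME followed by a regular expression, and grok refers to it as %{NAME}. When the pattern you need is not in the shipped files, the grok filter's pattern_definitions option lets you declare one inline. A minimal sketch, in which ORDERID, its regular expression, and the field names are hypothetical:

```conf
filter {
  grok {
    # ORDERID is a made-up pattern name used only for this example.
    pattern_definitions => {
      "ORDERID" => "[A-Z]{2}[0-9]{6}"
    }
    match => {
      "message" => "%{IP:clientip} %{ORDERID:order_id}"
    }
  }
}
```

With this filter, an input line such as `1.1.1.1 AB123456` should produce clientip and order_id fields.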

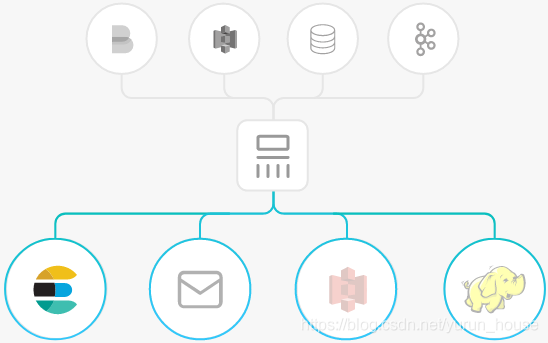

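3.3 Output: saves the filtered data to databases and other storage back ends.

3.4 Summary

inputs: required; responsible for producing events (inputs generate events). Common plugins: file, syslog, redis, beats (for example, Filebeat)

filters: optional; responsible for processing and transforming data (filters modify them). Common plugins: grok, mutate, drop, clone, geoip

outputs: required; responsible for sending data on (outputs ship them elsewhere). Common plugins: elasticsearch, file, graphite, statsd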
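To make the three stages concrete, here is a minimal sketch of a pipeline that uses one plugin from each group. The port 5044, the Elasticsearch address, and the index name are assumptions chosen for illustration, not values from these notes.

```conf
input {
  # Receive events from Filebeat (5044 is an assumed port).
  beats {
    port => 5044
  }
}

filter {
  # Parse the raw line into named fields.
  grok {
    match => { "message" => "%{COMBINEDAPACHELOG}" }
  }
  # Drop a field that is not needed downstream.
  mutate {
    remove_field => ["@version"]
  }
}

output {
  # Index events into Elasticsearch (assumed local address and index name).
  elasticsearch {
    hosts => ["http://localhost:9200"]
    index => "weblogs-%{+YYYY.MM.dd}"
  }
}
```

Filters run in the order they are written, so grok creates the fields before mutate removes anything.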

4: Installing Logstash from the RPM package

Four nodes are prepared for the experiments:

| IP | Node |
|---|---|
| 192.168.126.128 | node1 |
| 192.168.126.129 | node2 |
| 192.168.126.130 | node3 |
| 192.168.126.131 | node4 |

[root@node1 ~]# rpm -ivh logstash-7.9.1.rpm

warning: logstash-7.9.1.rpm: Header V4 RSA/SHA512 Signature, key ID d88e42b4: NOKEY

Preparing... ################################# [100%]

Updating / installing...

1:logstash-1:7.9.1-1 ################################# [100%]

Using provided startup.options file: /etc/logstash/startup.options

/usr/share/logstash/vendor/bundle/jruby/2.5.0/gems/pleaserun-0.0.31/lib/pleaserun/platform/base.rb:112: warning: constant ::Fixnum is deprecated

Successfully created system startup script for Logstash

[root@node1 ~]# vim /etc/profile.d/logstash.sh    # add the Logstash bin directory to PATH

export PATH=$PATH:/usr/share/logstash/bin

[root@node1 ~]# source /etc/profile.d/logstash.sh

[root@node1 ~]# java -version    # Logstash runs on JRuby, so it needs a Java runtime

openjdk version "1.8.0_262"

OpenJDK Runtime Environment (build 1.8.0_262-b10)

OpenJDK 64-Bit Server VM (build 25.262-b10, mixed mode)

5: The Logstash workflow

input {    # where the data is read from

}

filter {    # parse and structure the data with grok patterns

}

output {    # where the processed data is sent and stored

}

Example 1: read from standard input and print to standard output.

[root@node1 ~]# cd /etc/logstash/conf.d/    # Logstash configuration files live in this directory by default

[root@node1 conf.d]# ls

[root@node1 conf.d]# vim shil.conf

input {

stdin {    # standard input

}

}

output {

stdout {    # standard output

codec => rubydebug    # print each event in the rubydebug format

}

}

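[root@node1 conf.d]# logstash -f /etc/logstash/conf.d/shil.conf --config.debug

Note: --config.debug only logs the compiled configuration, and only when the log level is debug (see the warning in the output). To check a configuration file for errors, use --config.test_and_exit (-t) instead.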

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path /usr/share/logstash/config/log4j2.properties. Using default config which logs errors to the console

[WARN ] 2020-10-13 13:11:00.251 [main] runner - --config.debug was specified, but log.level was not set to 'debug'! No config info will be logged.

[INFO ] 2020-10-13 13:11:00.261 [main] runner - Starting Logstash {"logstash.version"=>"7.9.1", "jruby.version"=>"jruby 9.2.13.0 (2.5.7) 2020-08-03 9a89c94bcc OpenJDK 64-Bit Server VM 25.262-b10 on 1.8.0_262-b10 +indy +jit [linux-x86_64]"}

[INFO ] 2020-10-13 13:11:00.319 [main] writabledirectory - Creating directory {:setting=>"path.queue", :path=>"/usr/share/logstash/data/queue"}

[INFO ] 2020-10-13 13:11:00.340 [main] writabledirectory - Creating directory {:setting=>"path.dead_letter_queue", :path=>"/usr/share/logstash/data/dead_letter_queue"}

[WARN ] 2020-10-13 13:11:00.803 [LogStash::Runner] multilocal - Ignoring the 'pipelines.yml' file because modules or command line options are specified

[INFO ] 2020-10-13 13:11:00.845 [LogStash::Runner] agent - No persistent UUID file found. Generating new UUID {:uuid=>"593d27c7-7f01-4bbc-a68c-e60c555d2f73", :path=>"/usr/share/logstash/data/uuid"}

[INFO ] 2020-10-13 13:11:02.670 [Converge PipelineAction::Create<main>] Reflections - Reflections took 44 ms to scan 1 urls, producing 22 keys and 45 values

[INFO ] 2020-10-13 13:11:03.627 [[main]-pipeline-manager] javapipeline - Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>2, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50, "pipeline.max_inflight"=>250, "pipeline.sources"=>["/etc/logstash/conf.d/shil.conf"], :thread=>"#<Thread:0x758c4715 run>"}

[INFO ] 2020-10-13 13:11:04.503 [[main]-pipeline-manager] javapipeline - Pipeline Java execution initialization time {"seconds"=>0.87}

[INFO ] 2020-10-13 13:11:04.567 [[main]-pipeline-manager] javapipeline - Pipeline started {"pipeline.id"=>"main"}

The stdin plugin is now waiting for input:

[INFO ] 2020-10-13 13:11:04.682 [Agent thread] agent - Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[INFO ] 2020-10-13 13:11:04.935 [Api Webserver] agent - Successfully started Logstash API endpoint {:port=>9600}

^C[WARN ] 2020-10-13 13:16:23.243 [SIGINT handler] runner - SIGINT received. Shutting down.

[INFO ] 2020-10-13 13:16:24.389 [Converge PipelineAction::Stop<main>] javapipeline - Pipeline terminated {"pipeline.id"=>"main"}

^C[FATAL] 2020-10-13 13:16:24.429 [SIGINT handler] runner - SIGINT received. Terminating immediately..

[ERROR] 2020-10-13 13:16:24.490 [LogStash::Runner] Logstash - org.jruby.exceptions.ThreadKill

[root@node1 conf.d]# logstash -f /etc/logstash/conf.d/shil.conf

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path /usr/share/logstash/config/log4j2.properties. Using default config which logs errors to the console

[INFO ] 2020-10-13 13:16:44.536 [main] runner - Starting Logstash {"logstash.version"=>"7.9.1", "jruby.version"=>"jruby 9.2.13.0 (2.5.7) 2020-08-03 9a89c94bcc OpenJDK 64-Bit Server VM 25.262-b10 on 1.8.0_262-b10 +indy +jit [linux-x86_64]"}

[WARN ] 2020-10-13 13:16:44.920 [LogStash::Runner] multilocal - Ignoring the 'pipelines.yml' file because modules or command line options are specified

[INFO ] 2020-10-13 13:16:46.360 [Converge PipelineAction::Create<main>] Reflections - Reflections took 37 ms to scan 1 urls, producing 22 keys and 45 values

[INFO ] 2020-10-13 13:16:47.108 [[main]-pipeline-manager] javapipeline - Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>2, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50, "pipeline.max_inflight"=>250, "pipeline.sources"=>["/etc/logstash/conf.d/shil.conf"], :thread=>"#<Thread:0x4df75091 run>"}

[INFO ] 2020-10-13 13:16:47.839 [[main]-pipeline-manager] javapipeline - Pipeline Java execution initialization time {"seconds"=>0.73}

[INFO ] 2020-10-13 13:16:47.900 [[main]-pipeline-manager] javapipeline - Pipeline started {"pipeline.id"=>"main"}

The stdin plugin is now waiting for input:

[INFO ] 2020-10-13 13:16:48.010 [Agent thread] agent - Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[INFO ] 2020-10-13 13:16:48.227 [Api Webserver] agent - Successfully started Logstash API endpoint {:port=>9600}

Now type a line of text:

hello world

{

"host" => "node1",    # current host

"message" => "hello world",    # the text that was entered

"@version" => "1",    # version number

"@timestamp" => 2020-10-13T06:08:07.476Z

}

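Example 2: parse log lines with grok and print the result to standard output.

2.1 Define a custom grok expression to parse the log line.

Syntax:

%{SYNTAX:SEMANTIC}

SYNTAX: the name of a predefined pattern;

SEMANTIC: the identifier you give to the matched text.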

[root@node1 conf.d]# vim groksimple.conf

input {

stdin {

}

}

filter {

grok {

match => {

"message" => "%{IP:clientip} %{WORD:method} %{URIPATHPARAM:request} %{NUMBER:bytes} %{NUMBER:duration}" }

}

}

output {

stdout {

codec => rubydebug

}

}

[root@node1 conf.d]# logstash -f /etc/logstash/conf.d/groksimple.conf

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path /usr/share/logstash/config/log4j2.properties. Using default config which logs errors to the console

[INFO ] 2020-10-13 14:29:41.936 [main] runner - Starting Logstash {"logstash.version"=>"7.9.1", "jruby.version"=>"jruby 9.2.13.0 (2.5.7) 2020-08-03 9a89c94bcc OpenJDK 64-Bit Server VM 25.262-b10 on 1.8.0_262-b10 +indy +jit [linux-x86_64]"}

[WARN ] 2020-10-13 14:29:42.412 [LogStash::Runner] multilocal - Ignoring the 'pipelines.yml' file because modules or command line options are specified

[INFO ] 2020-10-13 14:29:44.025 [Converge PipelineAction::Create<main>] Reflections - Reflections took 42 ms to scan 1 urls, producing 22 keys and 45 values

[INFO ] 2020-10-13 14:29:44.995 [[main]-pipeline-manager] javapipeline - Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>2, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50, "pipeline.max_inflight"=>250, "pipeline.sources"=>["/etc/logstash/conf.d/groksimple.conf"], :thread=>"#<Thread:0x4ca2f74b run>"}

[INFO ] 2020-10-13 14:29:45.749 [[main]-pipeline-manager] javapipeline - Pipeline Java execution initialization time {"seconds"=>0.74}

[INFO ] 2020-10-13 14:29:45.820 [[main]-pipeline-manager] javapipeline - Pipeline started {"pipeline.id"=>"main"}

The stdin plugin is now waiting for input:

[INFO ] 2020-10-13 14:29:45.902 [Agent thread] agent - Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[INFO ] 2020-10-13 14:29:46.098 [Api Webserver] agent - Successfully started Logstash API endpoint {:port=>9600}

Now enter a sample log line on standard input:

1.1.1.1 get /index.html 30 0.23

{

"@timestamp" => 2020-10-13T06:30:11.973Z,

"host" => "node1",

"@version" => "1",

"request" => "/index.html",

"message" => "1.1.1.1 get /index.html 30 0.23",

"duration" => "0.23",

"clientip" => "1.1.1.1",

"method" => "get",

"bytes" => "30"

}
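In the output above, bytes and duration are captured as strings. Grok also accepts an optional third part, %{SYNTAX:SEMANTIC:TYPE}, where TYPE is int or float, to convert the captured value. The sketch below is a variation on the filter from this example with conversions added; it was not part of the original run.

```conf
filter {
  grok {
    match => {
      # :int and :float convert the captured text to numbers.
      "message" => "%{IP:clientip} %{WORD:method} %{URIPATHPARAM:request} %{NUMBER:bytes:int} %{NUMBER:duration:float}"
    }
  }
}
```

With this change, rubydebug should print bytes and duration as numbers rather than quoted strings.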

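Example 3: parse the logs produced by a web server and print them to standard output.

For example, grok ships with a dedicated pattern for the access logs that Apache produces.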

[root@node1 conf.d]# vim httpdsimple.conf

input {

file {    # read events from a file

path => ["/var/log/httpd/access_log"]    # file path

type => "apachelog"    # sets the event's type field

start_position => "beginning"    # read the file from the beginning

}

}

filter {

grok {    # parsing rules

match => {

"message" => "%{COMBINEDAPACHELOG}"}    # predefined pattern for the httpd combined log format

}

}

output {

stdout {

codec => rubydebug

}

}

[root@node4 conf.d]# logstash -f /etc/logstash/conf.d/httpd.conf --path.data=/tmp

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path /usr/share/logstash/config/log4j2.properties. Using default config which logs errors to the console

[INFO ] 2020-10-13 17:57:53.743 [main] runner - Starting Logstash {"logstash.version"=>"7.9.1", "jruby.version"=>"jruby 9.2.13.0 (2.5.7) 2020-08-03 9a89c94bcc OpenJDK 64-Bit Server VM 25.262-b10 on 1.8.0_262-b10 +indy +jit [linux-x86_64]"}

[INFO ] 2020-10-13 17:57:53.803 [main] writabledirectory - Creating directory {:setting=>"path.queue", :path=>"/tmp/queue"}

[INFO ] 2020-10-13 17:57:53.819 [main] writabledirectory - Creating directory {:setting=>"path.dead_letter_queue", :path=>"/tmp/dead_letter_queue"}

[WARN ] 2020-10-13 17:57:54.315 [LogStash::Runner] multilocal - Ignoring the 'pipelines.yml' file because modules or command line options are specified

[INFO ] 2020-10-13 17:57:54.352 [LogStash::Runner] agent - No persistent UUID file found. Generating new UUID {:uuid=>"99ab593e-1436-49c0-874a-e815644cb316", :path=>"/tmp/uuid"}

[INFO ] 2020-10-13 17:57:56.722 [Converge PipelineAction::Create<main>] Reflections - Reflections took 51 ms to scan 1 urls, producing 22 keys and 45 values

[INFO ] 2020-10-13 17:57:58.642 [[main]-pipeline-manager] javapipeline - Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>2, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50, "pipeline.max_inflight"=>250, "pipeline.sources"=>["/etc/logstash/conf.d/httpd.conf"], :thread=>"#<Thread:0x7878416d run>"}

[INFO ] 2020-10-13 17:57:59.663 [[main]-pipeline-manager] javapipeline - Pipeline Java execution initialization time {"seconds"=>1.01}

[INFO ] 2020-10-13 17:58:00.166 [[main]-pipeline-manager] file - No sincedb_path set, generating one based on the "path" setting {:sincedb_path=>"/tmp/plugins/inputs/file/.sincedb_15940cad53dd1d99808eeaecd6f6ad3f", :path=>["/var/log/httpd/access_log"]}

[INFO ] 2020-10-13 17:58:00.198 [[main]-pipeline-manager] javapipeline - Pipeline started {"pipeline.id"=>"main"}

[INFO ] 2020-10-13 17:58:00.316 [Agent thread] agent - Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[INFO ] 2020-10-13 17:58:00.356 [[main]<file] observingtail - START, creating Discoverer, Watch with file and sincedb collections

[INFO ] 2020-10-13 17:58:00.852 [Api Webserver] agent - Successfully started Logstash API endpoint {:port=>9603}

Open 10.5.100.183 directly in a browser to generate some access-log entries:

{

"@timestamp" => 2020-10-13T10:01:02.347Z,

"message" => "- - - [13/Oct/2020:18:01:01 +0800] \"GET / HTTP/1.1\" 304 - \"-\" \"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36\"",

"@version" => "1",

"tags" => [

[0] "_grokparsefailure"

],

"path" => "/var/log/httpd/access_log",

"type" => "apachelog",

"host" => "node4"

}

{

"@timestamp" => 2020-10-13T10:01:02.407Z,

"message" => "- - - [13/Oct/2020:18:01:01 +0800] \"GET /favicon.ico HTTP/1.1\" 404 209 \"http://10.5.100.183/\" \"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36\"",

"@version" => "1",

"tags" => [

[0] "_grokparsefailure"

],

"path" => "/var/log/httpd/access_log",

"type" => "apachelog",

"host" => "node4"

}
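Both events above carry the _grokparsefailure tag, which means the grok pattern did not match the line. The most likely reason is that these log lines begin with "-", while COMBINEDAPACHELOG expects a client IP address or hostname in the first position. A hedged sketch of a pattern that tolerates the missing client address follows; it is one possible fix built from standard grok patterns, not something verified against this server, and the field names simply mirror the ones used for Apache logs.

```conf
filter {
  grok {
    # Single quotes let the pattern contain literal double quotes.
    # (?:%{IPORHOST:clientip}|-) also accepts "-" where the client address is missing.
    match => {
      "message" => '(?:%{IPORHOST:clientip}|-) %{NOTSPACE:ident} %{NOTSPACE:auth} \[%{HTTPDATE:timestamp}\] "%{WORD:verb} %{NOTSPACE:request} HTTP/%{NUMBER:httpversion}" %{NUMBER:response} (?:%{NUMBER:bytes}|-) %{QS:referrer} %{QS:agent}'
    }
  }
}
```

If the server's LogFormat was customized, adjusting it to log the client address would let the stock COMBINEDAPACHELOG pattern match instead.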

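We have now walked through the complete Logstash workflow, from input through filter to output. The next article covers how to read httpd logs, store them in redis, read the data back from redis, and store it in elasticsearch.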

菜鸟笔记
