The error is as follows:

java.net.ConnectException: Call From V_LZ/192.168.53.1 to hadoop2:8020 failed on connection exception: java.net.ConnectException: Connection refused: no further information; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:791)

at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:731)

at org.apache.hadoop.ipc.Client.call(Client.java:1472)

at org.apache.hadoop.ipc.Client.call(Client.java:1399)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:232)

at com.sun.proxy.$Proxy9.getFileInfo(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.getFileInfo(ClientNamenodeProtocolTranslatorPB.java:752)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:187)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:102)

at com.sun.proxy.$Proxy10.getFileInfo(Unknown Source)

at org.apache.hadoop.hdfs.DFSClient.getFileInfo(DFSClient.java:1988)

at org.apache.hadoop.hdfs.DistributedFileSystem$18.doCall(DistributedFileSystem.java:1118)

at org.apache.hadoop.hdfs.DistributedFileSystem$18.doCall(DistributedFileSystem.java:1114)

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)

at org.apache.hadoop.hdfs.DistributedFileSystem.getFileStatus(DistributedFileSystem.java:1114)

at org.apache.hadoop.fs.FileSystem.exists(FileSystem.java:1400)

at com.lens.task.HdfsOperate.main(HdfsOperate.java:22)

Caused by: java.net.ConnectException: Connection refused: no further information

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717)

at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:530)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:494)

at org.apache.hadoop.ipc.Client$Connection.setupConnection(Client.java:607)

at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:705)

at org.apache.hadoop.ipc.Client$Connection.access$2800(Client.java:368)

at org.apache.hadoop.ipc.Client.getConnection(Client.java:1521)

at org.apache.hadoop.ipc.Client.call(Client.java:1438)

... 18 more

Process finished with exit code 0

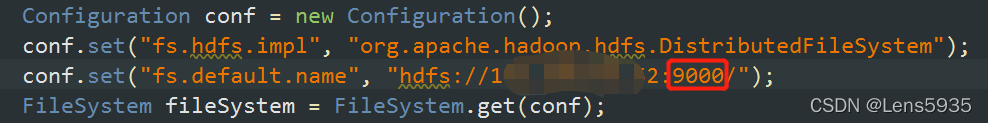

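Cause: the ports do not match. The client is calling a port (here hadoop2:8020) that the NameNode is not listening on, so the connection is refused.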

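Solution: change the port used by the client so that it matches the server's port, i.e. the one the cluster declares in `fs.defaultFS`.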
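Before changing any configuration, it can help to confirm which port the client is really trying and whether anything is listening there. The following is a minimal sketch using only the JDK, not the Hadoop API; the class name `PortProbe`, its method names, and the fallback to 8020 when the URI has no port are assumptions made for illustration.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.net.URI;

class PortProbe {
    // Assumption for this sketch: fall back to 8020 when the URI names no port.
    static final int ASSUMED_DEFAULT_PORT = 8020;

    /** Extracts the port from an fs.defaultFS-style URI such as hdfs://hadoop2:8020. */
    static int portOf(String defaultFs) {
        int port = URI.create(defaultFs).getPort(); // -1 when the URI has no port
        return port == -1 ? ASSUMED_DEFAULT_PORT : port;
    }

    /** Returns true if a plain TCP connection to host:port succeeds within timeoutMs. */
    static boolean canConnect(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            return false; // refused, timed out, or host could not be resolved
        }
    }

    public static void main(String[] args) {
        // Pass the client's fs.defaultFS value as the first argument.
        String defaultFs = args.length > 0 ? args[0] : "hdfs://hadoop2:8020";
        URI uri = URI.create(defaultFs);
        int port = portOf(defaultFs);
        boolean reachable = canConnect(uri.getHost(), port, 2000);
        System.out.println(uri.getHost() + ":" + port
                + (reachable ? " is reachable" : " is NOT reachable"));
    }
}
```

If the probe fails for 8020 but succeeds for another port such as 9000, that points to the port mismatch described above rather than a stopped NameNode.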

OK, that's it, problem solved.

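Port 8020:

Port 8020 is the NameNode's default RPC port. In both Hadoop 1.x and Hadoop 2.x it carries, by default, the heartbeat traffic between the NameNode and the DataNodes as well as the FileSystem traffic (the RPC port that HDFS clients use to reach the cluster). Heartbeats move to a separate port only if `dfs.namenode.servicerpc-address` is configured. All of this refers to the default settings; the port can be set explicitly: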

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadoop01:8020</value>

</property>

Port 9000:

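Port 9000 is not a built-in default. It is the FileSystem (client RPC) port that the official setup guide and many tutorials put into `fs.defaultFS`, for example `hdfs://localhost:9000`. On a cluster configured this way, clients must connect to 9000 rather than 8020. In Hadoop 2.x the port is set through `fs.defaultFS` in core-site.xml, or through `dfs.namenode.rpc-address` in hdfs-site.xml.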
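To find out which port a cluster actually declares, one option is to read the `fs.defaultFS` value straight out of the configuration XML and compare it with what the client uses. The sketch below does this with the JDK's DOM parser rather than Hadoop's own `Configuration` class; the class name `DefaultFsReader` and the inline sample XML are assumptions for illustration.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

class DefaultFsReader {
    /** Returns the <value> of the <property> with the given <name>, or null if absent. */
    static String readProperty(String xml, String name) {
        try {
            Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
            NodeList props = doc.getElementsByTagName("property");
            for (int i = 0; i < props.getLength(); i++) {
                Element prop = (Element) props.item(i);
                NodeList names = prop.getElementsByTagName("name");
                NodeList values = prop.getElementsByTagName("value");
                if (names.getLength() == 0 || values.getLength() == 0) {
                    continue; // skip incomplete property entries
                }
                if (names.item(0).getTextContent().trim().equals(name)) {
                    return values.item(0).getTextContent().trim();
                }
            }
            return null;
        } catch (Exception e) {
            throw new IllegalStateException("could not parse configuration XML", e);
        }
    }

    public static void main(String[] args) {
        // Sample content mirroring the property shown earlier in this post.
        String xml = "<configuration>"
                + "<property>"
                + "<name>fs.defaultFS</name>"
                + "<value>hdfs://hadoop01:8020</value>"
                + "</property>"
                + "</configuration>";
        System.out.println("fs.defaultFS = " + readProperty(xml, "fs.defaultFS"));
    }
}
```

In practice the XML string would come from the cluster's own configuration file; the client's URI should then use the same host and port.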

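Port 50070:

Port 50070 is the NameNode's HTTP (web UI) port. It is opened from a browser to view the NameNode and to monitor its DataNodes. In Hadoop 2.x it can likewise be configured in hdfs-site.xml, through `dfs.namenode.http-address`.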

Beginner's notes